White Paper: Platform Root of Trust Application on Intel Server

The white paper focuses on Wiwynn's implementation of Intel's Platform Firmware Resilience (PFR) in server systems, based on the NIST SP 800-193...

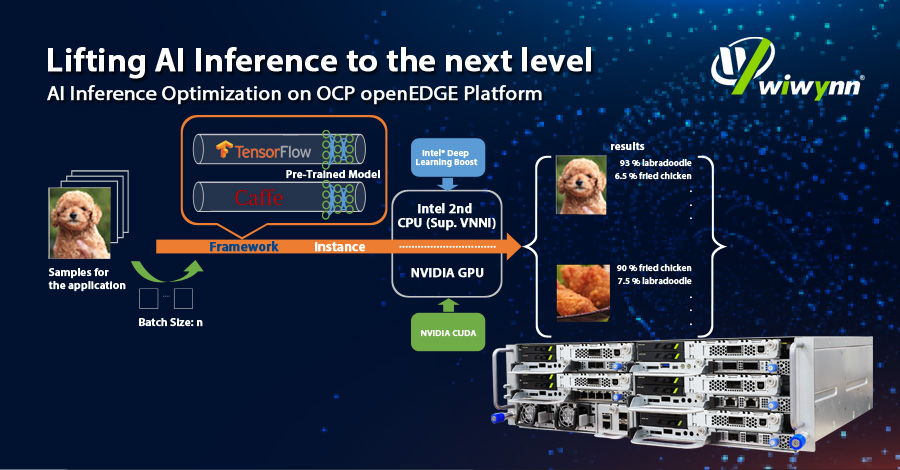

Looking for an edge AI server for your new applications? What is the optimal solution, and which parameters should you take into consideration? Check out Wiwynn’s latest white paper on AI inference optimization on the OCP openEDGE platform.

See how the Wiwynn EP100 helps you keep pace with the rapidly growing range of edge applications and diverse AI inference workloads through powerful CPU and GPU inference acceleration.

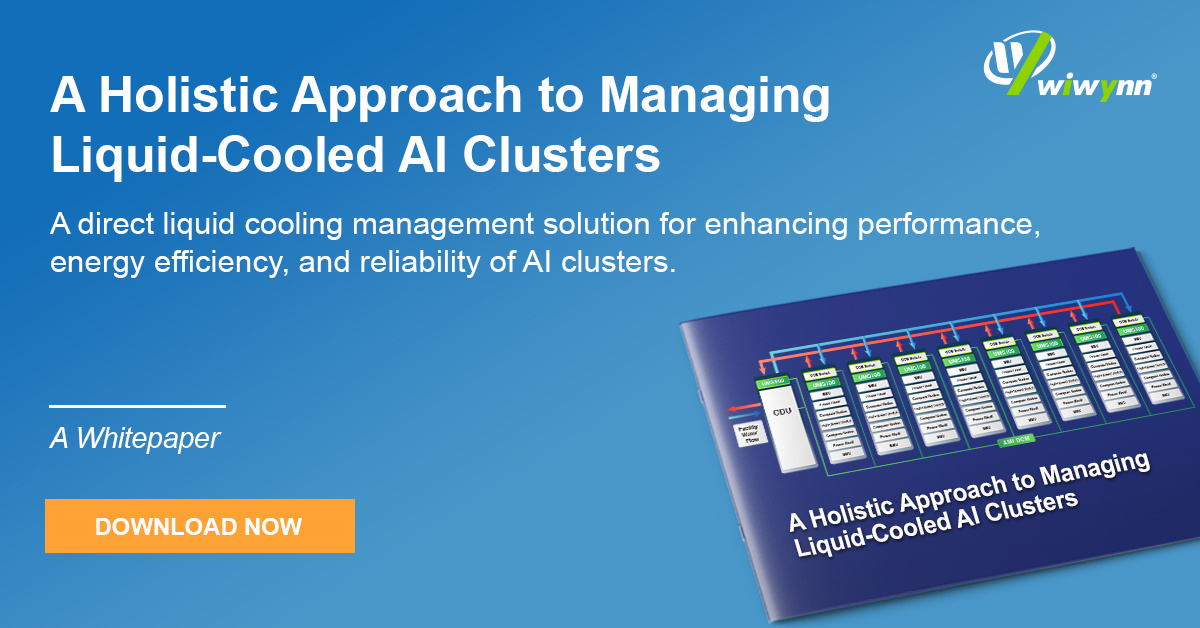

As thermal design power (TDP) for modern processors such as CPUs, GPUs, and TPUs exceeds 1 kW, traditional air cooling methods are proving...

The integration of advanced liquid cooling systems in AI clusters is essential for maintaining thermal stability and optimizing performance. Key...