
Recap: Wiwynn x NVIDIA GB300 NVL72 & Cooling at GTC 2025

Wiwynn Highlights NVIDIA GB300 NVL72 and Liquid-Cooling Innovation at GTC 2025!

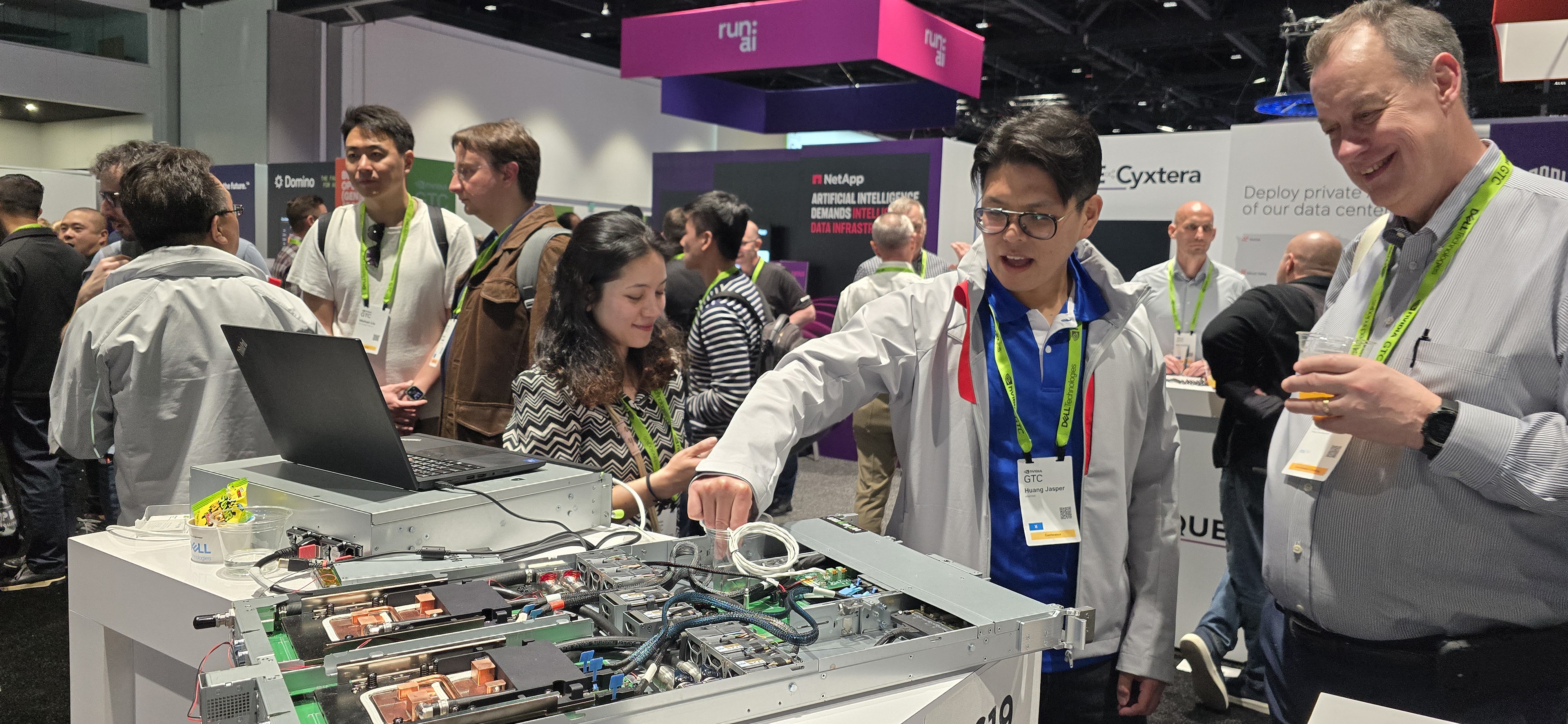

San Jose, Calif. – March 18, 2024 — Wiwynn, an innovative cloud IT infrastructure provider for data centers, is showcasing its latest rack-level AI computing solutions at NVIDIA GTC, a global AI conference running through March 21. Located at booth #1619, Wiwynn will spotlight its AI server racks based on the NVIDIA GB200 NVL72 system, along with its cutting-edge, rack-level, liquid-cooling management system, UMS100. The company aims to address the rising demand for massive computing power and advanced cooling solutions in the GenAI era.

As a close NVIDIA partner, Wiwynn is among the first in line with NVIDIA GB200 NVL72 readiness. The newly announced NVIDIA GB200 Grace™ Blackwell Superchip supports the latest NVIDIA Quantum-X800 InfiniBand and NVIDIA Spectrum™-X800 Ethernet platforms. This innovation catapults generative AI to trillion-parameter scale, delivering up to 30x faster real-time large language model inference and up to 25x lower TCO and energy consumption compared with the previous generation of GPUs.

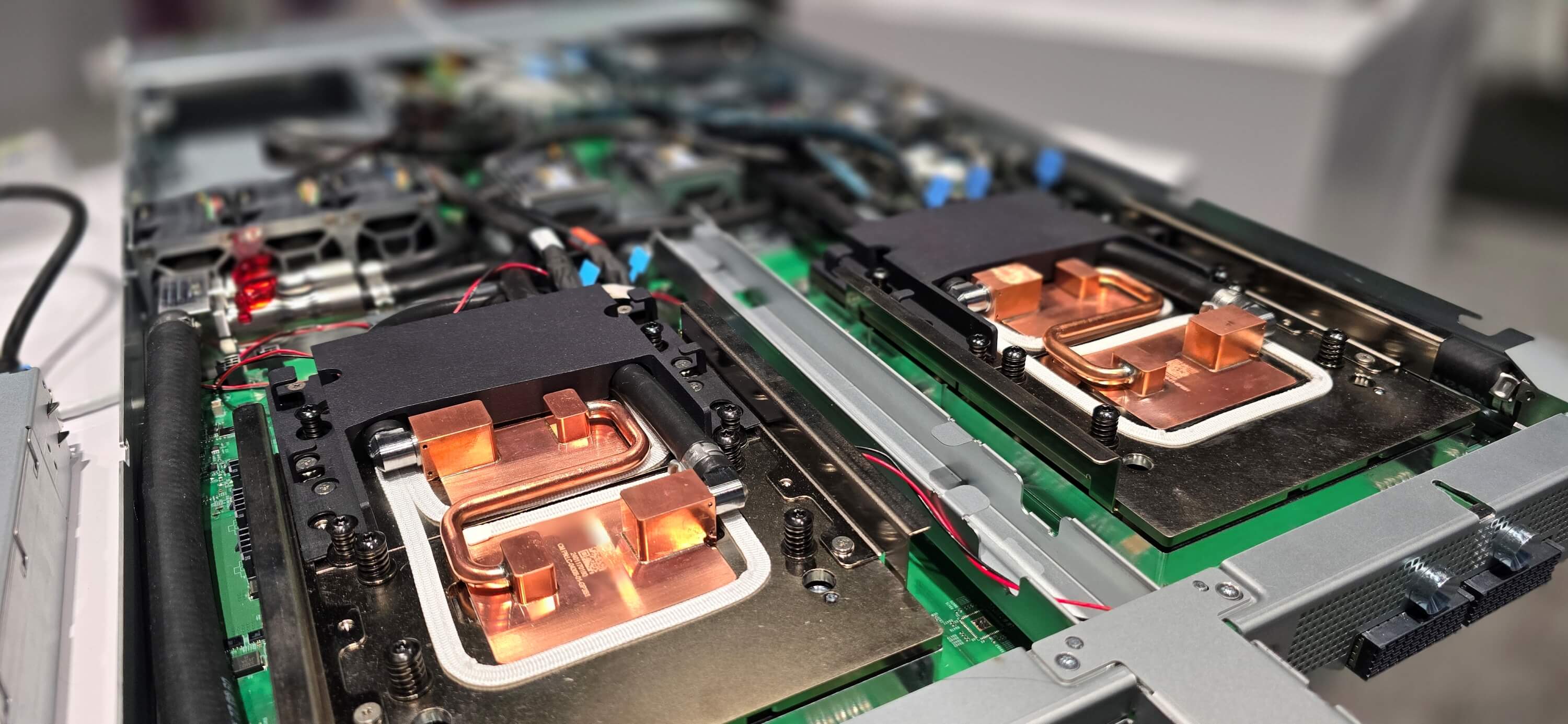

Wiwynn will build optimized, rack-level, liquid-cooled AI solutions by leveraging its strengths in high-speed data transmission, power efficiency, system integration, and advanced cooling. It aims to meet customer demands for enhanced performance, scalability, and diversity in data center infrastructure.

Additionally, Wiwynn will demonstrate its universal liquid-cooling management system, the UMS100, in response to the growing need for liquid cooling in power-intensive AI/HPC computing pods. The UMS100 offers real-time monitoring, cooling energy optimization, and rapid leak detection and containment. It integrates seamlessly with existing data center management systems through the Redfish interface, and because it supports industry-standard protocols, it is compatible with different coolant distribution units (CDUs) and sidecars. These features make the UMS100 an ideal solution for data centers seeking greater flexibility and adaptability in their cooling architectures.
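Redfish integration means a facility's existing management tooling can read cooling hardware the same way it reads any other Redfish service: fetch a collection, follow each member's `@odata.id` link, and inspect the member's `Status`. The following is a minimal sketch of that pattern, not Wiwynn's documented API. The `/redfish/v1/ThermalEquipment/CDUs` path, the helper names `http_fetch` and `unhealthy_members`, and the omission of authentication are all illustrative assumptions.

```python
import json
import urllib.request
from typing import Callable, Dict, List

# Assumed collection path for coolant distribution units; the UMS100's actual
# Redfish resource tree is not described in this announcement.
CDU_COLLECTION = "/redfish/v1/ThermalEquipment/CDUs"

# A fetcher maps a Redfish path to its decoded JSON body.
Fetch = Callable[[str], dict]


def http_fetch(base_url: str) -> Fetch:
    """Return a fetcher that GETs a Redfish path from base_url (no auth, for illustration)."""
    def fetch(path: str) -> dict:
        req = urllib.request.Request(base_url + path, headers={"Accept": "application/json"})
        with urllib.request.urlopen(req, timeout=10) as resp:
            return json.load(resp)
    return fetch


def unhealthy_members(fetch: Fetch, collection_path: str) -> List[Dict[str, str]]:
    """Walk a Redfish collection and list members whose Status.Health is not "OK"."""
    collection = fetch(collection_path)
    problems = []
    for link in collection.get("Members", []):
        member = fetch(link["@odata.id"])
        # Redfish resources report health as Status.Health ("OK", "Warning", "Critical").
        health = member.get("Status", {}).get("Health", "Unknown")
        if health != "OK":
            problems.append({"id": link["@odata.id"], "health": health})
    return problems
```

Because the fetcher is passed in as a function, the same walk can run against a live controller via `http_fetch("https://<controller-address>")` or against canned JSON in tests. A production client would also need TLS verification and Redfish session or basic authentication.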

"We are excited to be one of the first in line with NVIDIA GB200 NVL72 readiness and showcase our latest innovations with NVIDIA at GTC 2024," said Dr. Sunlai Chang, CEO and President at Wiwynn. "With our GB200-enabled AI rack, we deliver unmatched computing power and ensure customizable adaptability to meet the diverse needs of scalability, flexibility, and efficient management in data centers. We will continue collaborating with NVIDIA to develop optimized and sustainable solutions for data centers in the GenAI era."

“Wiwynn delivers scalable, secure NVIDIA accelerated computing across various industries,” said Kaustubh Sanghani, vice president of GPU product management at NVIDIA. “With our NVIDIA GB200 NVL72 system, Wiwynn is working to innovate the modern data center and power a new era of computing.”

About Wiwynn

Wiwynn is an innovative cloud IT infrastructure provider of high-quality computing and storage products, plus rack solutions for leading data centers. We are committed to the vision of “unleash the power of digitalization; ignite the innovation of sustainability”. The Company aggressively invests in next-generation technologies to provide workload- and energy-optimized IT solutions with the best TCO (Total Cost of Ownership), from cloud to edge.

Get more information on Wiwynn’s Facebook, LinkedIn, and website.
